With the recent relaunch of my now-static site I’ve had a lot of fun with cache expiry and dealing with CloudFront as a CDN. Here’s a small cache of wisdom I’ve gleaned from setting it up!

### Refresher on server/CDN cache

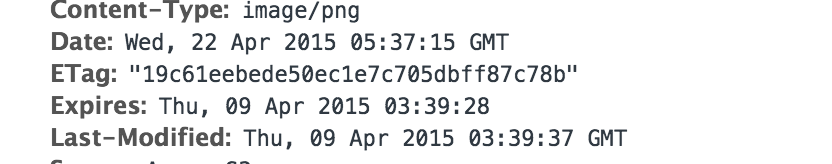

There’s an excellent summary of caching headers in Amazon’s documentation. In short:

- If your origin serves up a Cache-Control: max-age= header, CloudFront will check the origin again once the given number of seconds has passed.

- If your origin serves up an Expires: header, CloudFront will check the origin on every request after that date has passed.
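To make the two rules above concrete, here is a minimal Python sketch of how a cache might work out how long a response stays fresh. The function name `freshness_seconds` is made up for illustration, header names are matched exactly as written, and it ignores CloudFront's own minimum/maximum TTL settings, so treat it as a rough model rather than CloudFront's actual logic.

```python
import re
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Mapping, Optional


def freshness_seconds(headers: Mapping[str, str], now: datetime) -> Optional[int]:
    """Return how many seconds a response may be served from cache.

    max-age wins over Expires; None means neither header was present.
    `now` must be a timezone-aware datetime.
    """
    match = re.search(r"max-age\s*=\s*(\d+)", headers.get("Cache-Control", ""), re.IGNORECASE)
    if match:
        return int(match.group(1))  # relative to the time of the request

    expires = headers.get("Expires")
    if expires is None:
        return None
    try:
        expires_at = parsedate_to_datetime(expires)
    except (TypeError, ValueError):
        return 0  # an unparseable date is treated as already expired
    if expires_at.tzinfo is None:
        expires_at = expires_at.replace(tzinfo=timezone.utc)
    # Expires is an absolute time, so what is left depends on when we ask.
    return max(0, int((expires_at - now).total_seconds()))
```

Note how the max-age branch never looks at `now`, while the Expires branch shrinks towards zero as time passes.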

### Refresher on browser cache

If your local cached copy of a resource is unchanged, the server may reply with a 304 ‘Not Modified’ code. This is important since the resource itself doesn’t have to be downloaded again. You can use ETags too, as a sort of checksum or hash of the local asset, so that the server (or CDN in this case) can decide between a 200 with new content or a 304 with no content. A web browser also respects the same Cache-Control and Expires rules described above.
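The 200-versus-304 decision can be sketched in a few lines of Python. This is only an illustration: `make_etag` and `respond` are hypothetical names, hashing the body is just one common way to build an ETag, and real servers handle more cases than this.

```python
import hashlib
from typing import Optional, Tuple


def make_etag(body: bytes) -> str:
    """Build an ETag by hashing the content (one common approach)."""
    return '"' + hashlib.sha256(body).hexdigest() + '"'


def respond(body: bytes, if_none_match: Optional[str]) -> Tuple[int, bytes]:
    """Return (status, payload) for a GET with an optional If-None-Match header."""
    etag = make_etag(body)
    if if_none_match is not None:
        candidates = []
        for tag in if_none_match.split(","):
            tag = tag.strip()
            if tag.startswith("W/"):  # weak validators compare equal here
                tag = tag[2:]
            candidates.append(tag)
        if "*" in candidates or etag in candidates:
            return 304, b""  # Not Modified: nothing to download again
    return 200, body  # new or changed content, send it in full
```

The browser sends back the ETag it received earlier in If-None-Match; if it still matches the current content, the reply carries no body at all.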

Traditionally we use a CDN in front of our application, so our app/cms/framework of choice can handle the correct cache headers. Since we serve up new content, we can simply cache-bust the assets (you know, change the

As a side note, I’d like to clarify from an AWS point of view that I only use CloudFront to push down my SSL for developerjack.com. If, like most people, you’re in no dire need of SSL, then serving straight from the S3 bucket with a Route 53 record will work just fine.

Luckily, S3 buckets serve up their metadata as HTTP headers when website hosting is enabled! By pushing some additional fields with my site deployments I can configure the CDN as I like it. The Cache-Control header is the key here, since it’s always relative to the request time (and not an absolute, and therefore static, timestamp as in the Expires header). I emphasise this point profusely with static sites!
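As a rough sketch of what pushing that metadata could look like, the Python below only builds the argument list for an `s3cmd sync` call using its `--add-header` option; it does not run anything. The function name, the `public/` directory and the bucket name are placeholders I've assumed for illustration, not the actual deploy script.

```python
from typing import List


def s3cmd_sync_args(local_dir: str, bucket: str, max_age_seconds: int) -> List[str]:
    """Build an s3cmd command that uploads a site with a Cache-Control header."""
    if max_age_seconds < 0:
        raise ValueError("max-age cannot be negative")
    # max-age is relative to each request, so it never goes stale
    # the way an Expires date baked in at deploy time would.
    header = f"Cache-Control:max-age={max_age_seconds}"
    return ["s3cmd", "sync", f"--add-header={header}", local_dir, f"s3://{bucket}/"]


if __name__ == "__main__":
    # Hypothetical values: an hour's cache for everything.
    print(" ".join(s3cmd_sync_args("public/", "example-bucket", 3600)))
```

Passing the list to `subprocess.run` would perform the upload, assuming s3cmd is installed and configured.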

For now, everything is an hour’s cache. That’s cool, works for me! I’m hardly high traffic!

In future, I’ll slowly increase cache times for assets or pages that are older. For example:

- my homepage and tag pages might only get an hour’s cache

- blog posts older than a week might get a 24 hour cache

- blog posts older than a fortnight might get a week’s cache

- and so on!
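The tiers above could be sketched as a small Python lookup. The function name and the `kind` labels are hypothetical, and giving posts younger than a week the current one-hour default is my assumption, since the list doesn't cover them.

```python
from datetime import timedelta

HOUR = 60 * 60
DAY = 24 * HOUR
WEEK = 7 * DAY


def max_age_for(kind: str, age: timedelta) -> int:
    """Pick a max-age in seconds from the page type and its age."""
    if kind != "post":
        return HOUR  # homepage and tag pages change often
    if age > timedelta(weeks=2):
        return WEEK  # older than a fortnight
    if age > timedelta(weeks=1):
        return DAY  # older than a week
    return HOUR  # assumed default for fresh posts
```

For example, `max_age_for("post", timedelta(days=10))` lands in the 24 hour tier.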

Remember, if I really need to, I can always push a CDN invalidation for the longer caches, and that’s only a 15 minute propagation time.

I plan to be able to inspect the last modified time on the .md files via git, but there’s a little scripting to be done there.
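One possible shape for that scripting, as a hedged Python sketch: ask git for the committer timestamp of the last commit that touched a file. The function names are made up, it assumes it runs inside the site's git checkout, and the last commit time is only an approximation of "last modified".

```python
import subprocess
from datetime import datetime, timezone
from typing import Optional


def parse_commit_time(git_output: str) -> Optional[datetime]:
    """Turn the Unix timestamp printed by git into an aware datetime."""
    text = git_output.strip()
    if not text:
        return None  # git prints nothing for a file with no commits
    return datetime.fromtimestamp(int(text), tz=timezone.utc)


def last_modified(path: str) -> Optional[datetime]:
    """Return when `path` was last committed, or None if it never was."""
    result = subprocess.run(
        ["git", "log", "-1", "--format=%ct", "--", path],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_commit_time(result.stdout)
```

Subtracting the result from the current time gives the age of a post, which is the number the cache tiers would need.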

For now, I’ve put together a HorribleHack™ that redeploys (incl. meta tags) off my CodeShip deploy. You can see the details here.

P.S. You may notice me checking out a specific version of s3cmd; that’s because I’ve pre-vetted it. I don’t want an awry PR publishing my environment variables and giving access to the wrong people, now do I!
