OpenAPI is an excellent tool for defining an API, yet it’s often treated as just a documentation tool. In this post, I’ll explore how an OpenAPI spec can drive many parts of your API lifecycle.

The API

The API I’m building in this post is a simple echo API. You call GET /echo?echo=<message> and the response echoes back the message you pass in. If you want to skip straight to the end, you can grab the code from GitHub or call the API directly:

```shell
curl -X GET \
  'https://echo.services.developerjack.com/echo?echo=example' \
  -H 'Accept: application/json'
```


Mocking a new API

To begin with, an API spec is a fantastic tool for early prototyping. It helps us define a schema, the ways to interact with resources, and pretty much all the foundational API ‘things’ we need.

There are plenty of mocking tools out there, though I’ve been using SwaggerHub of late. Here’s a spec I created earlier. There’s no code behind it, but you can go right ahead and call the examples in the browser using SwaggerHub’s mock API generator.

Because you don’t have to write any code, an API spec can shorten the feedback loop on a new API design from days or hours to mere minutes. I wrote and deployed this example in under 5 minutes.
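To make the idea of mocking from a spec a bit more concrete, here’s a minimal Python sketch. It isn’t how SwaggerHub works internally. It assumes a hypothetical, hand-written OpenAPI 2.0 (Swagger) fragment for GET /echo with an example attached to the 200 response, and the `mock_response` helper is made up for illustration. The point is simply that a mock can answer requests purely from what the spec declares, with no implementation behind it.

```python
import json

# Hypothetical OpenAPI 2.0 (Swagger) fragment for the echo API.
# The layout follows Swagger 2.0; the example payload is an assumption.
SPEC = {
    "swagger": "2.0",
    "info": {"title": "Echo API", "version": "1.0.0"},
    "paths": {
        "/echo": {
            "get": {
                "parameters": [
                    {"name": "echo", "in": "query", "type": "string", "required": False}
                ],
                "responses": {
                    "200": {
                        "description": "The echoed message",
                        "examples": {"application/json": {"echo": "example"}},
                    }
                },
            }
        }
    },
}


def mock_response(spec, method, path):
    """Return (status, body) using only the examples declared in the spec."""
    operation = spec["paths"].get(path, {}).get(method.lower())
    if operation is None:
        # Anything not described in the spec simply doesn't exist in the mock.
        return 404, json.dumps({"error": "not in spec"})
    # Use the first declared response that carries a JSON example.
    for status, response in operation["responses"].items():
        example = response.get("examples", {}).get("application/json")
        if example is not None:
            return int(status), json.dumps(example)
    return 501, json.dumps({"error": "no example declared"})


print(mock_response(SPEC, "GET", "/echo"))
```

A real mock server wraps the same lookup in HTTP handling, and may also validate incoming parameters against the spec.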

Implementing an API

If you’re using a spec to get early feedback on your API design, you’re more likely to have a stable(ish) API definition when it comes time to implement it.

An API spec can also be useful in planning. You have an upfront list of endpoints, resources, verbs etc. that you can use to slice tasks and take a more methodical approach.
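As a rough illustration of using the spec as a planning checklist, this sketch walks the `paths` object and emits one task per endpoint and verb. The spec fragment (including the /docs path) and the `plan_tasks` helper are hypothetical; any OpenAPI document loaded into a dict could be fed through it the same way.

```python
# Hypothetical spec fragment: a couple of paths and their HTTP verbs.
SPEC = {
    "paths": {
        "/echo": {"get": {"summary": "Echo a message back"}},
        "/docs": {"get": {"summary": "Serve the API documentation"}},
    }
}

# Path items can also hold non-operation keys (e.g. shared "parameters"),
# so only keys that are HTTP verbs become tasks.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch"}


def plan_tasks(spec):
    """Turn each (verb, path) operation into a one-line task description."""
    tasks = []
    for path, item in sorted(spec["paths"].items()):
        for method, operation in item.items():
            if method in HTTP_METHODS:
                summary = operation.get("summary", "")
                tasks.append(f"{method.upper()} {path}: {summary}")
    return tasks


for task in plan_tasks(SPEC):
    print(task)
```

Each line maps naturally onto a ticket or card, which makes slicing the work fairly mechanical.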

Code Generation

Personally, I’m not a fan of code generation, but it can be an extremely useful tool, especially if you want to build a quick prototype.

In this post I’ll be implementing the API in Python, but there are plenty of server code generation tools out there for API specs.

```shell
pip install swagger_py_codegen
swagger_py_codegen -s openapi.yml . -p echo
```

To make this work with the spec, I then modified the /v1 prefix in the blueprint and edited the echo/v1/api/echo.py file to implement the ?echo parameter.

Custom implementation

For other services (or perhaps for an existing service) you’ll have to cut the code manually. That’s what I’ve done in api.py, which is about 20 lines of code.
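The post’s api.py is a Flask app and isn’t reproduced here. As a framework-free stand-in, here’s a minimal WSGI sketch of the same endpoint using only the standard library. The JSON response shape `{"echo": ...}`, the empty-string default when ?echo is missing, and the 404 body are all assumptions for illustration rather than details taken from the real spec.

```python
import json
from urllib.parse import parse_qs


def app(environ, start_response):
    """Echo the ?echo= query parameter back as JSON; 404 for any other route."""
    if environ.get("PATH_INFO") != "/echo" or environ.get("REQUEST_METHOD") != "GET":
        status, payload = "404 Not Found", {"error": "Not found"}
    else:
        params = parse_qs(environ.get("QUERY_STRING", ""))
        # parse_qs returns a list per key; take the first value, "" if absent.
        message = params.get("echo", [""])[0]
        status, payload = "200 OK", {"echo": message}
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]
```

To try it locally, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` will serve it on port 8000 (an arbitrary choice), after which the curl command from the top of the post works against localhost.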

Testing your API implementation

This is where API specs are incredibly powerful tools for the development part of your API lifecycle. Importantly, I’m maintaining an API spec alongside my implementation and not generating the API spec from annotations. By doing so I have a contract to test against, rather than testing my reference implementation of the API. I’ll explain this in a little more depth later.

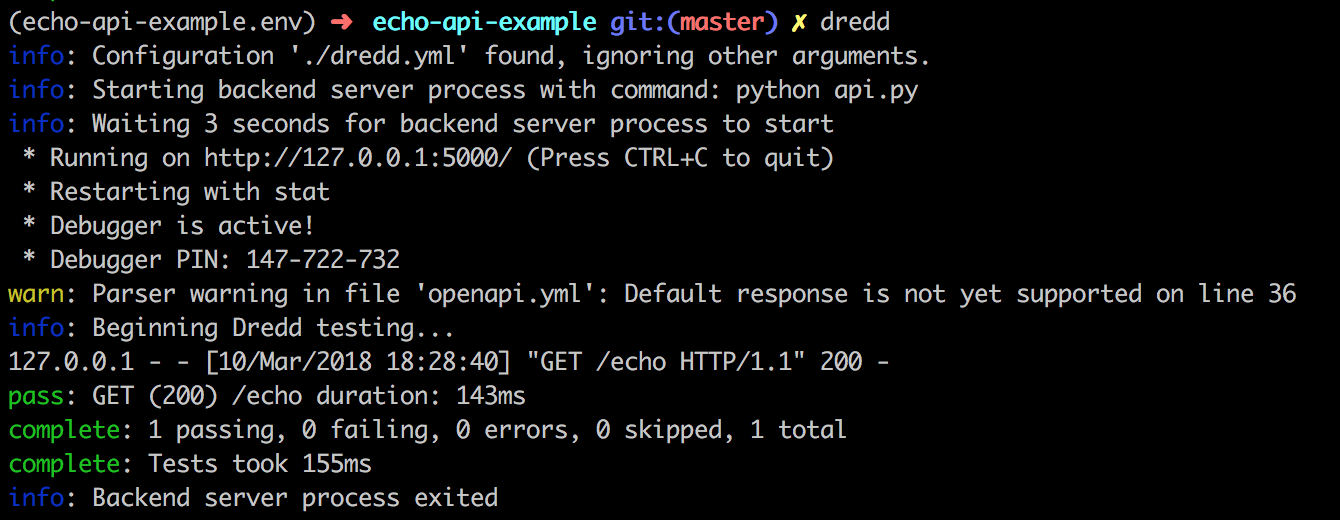

Dredd is an excellent tool for testing APIs against their spec, and it works with OpenAPI specs too! We can get it set up pretty quickly.

```shell
npm install -g dredd
dredd init
dredd
```

This gives me some handy checks that the code I’ve implemented behaves in a way that reflects the API specification I’ve written. That is to say, it matches the ‘contract’ of my API design.
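Dredd replays the requests described in the spec and compares the real responses with the declared ones. The sketch below is not Dredd’s code; it’s a hypothetical, heavily simplified contract check that compares an actual status code and JSON body against a hand-written, OpenAPI 2.0-style response definition (only `type`, `required` and `properties` are handled). It’s just meant to show what ‘matching the contract’ boils down to.

```python
import json

# Hypothetical response contract for GET /echo, in OpenAPI 2.0 style.
CONTRACT = {
    "200": {
        "schema": {
            "type": "object",
            "required": ["echo"],
            "properties": {"echo": {"type": "string"}},
        }
    }
}

# Only the JSON Schema types this sketch needs, mapped to Python types.
JSON_TYPES = {"string": str, "object": dict}


def contract_errors(contract, status, body_text):
    """Return the ways a response breaks the contract (an empty list means it passes)."""
    declared = contract.get(str(status))
    if declared is None:
        return [f"status {status} is not declared in the spec"]
    schema = declared["schema"]
    body = json.loads(body_text)
    if not isinstance(body, JSON_TYPES[schema["type"]]):
        return [f"body is not a JSON {schema['type']}"]
    errors = []
    for name in schema.get("required", []):
        if name not in body:
            errors.append(f"missing required property '{name}'")
    for name, prop in schema.get("properties", {}).items():
        if name in body and not isinstance(body[name], JSON_TYPES[prop["type"]]):
            errors.append(f"property '{name}' should be a {prop['type']}")
    return errors


print(contract_errors(CONTRACT, 200, '{"echo": "hi"}'))  # []
print(contract_errors(CONTRACT, 200, '{"message": "hi"}'))
```

Note that the check never looks at how the response was produced, only at whether it honours the spec, which is exactly the property that makes spec-based testing useful.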

Further testing with Postman

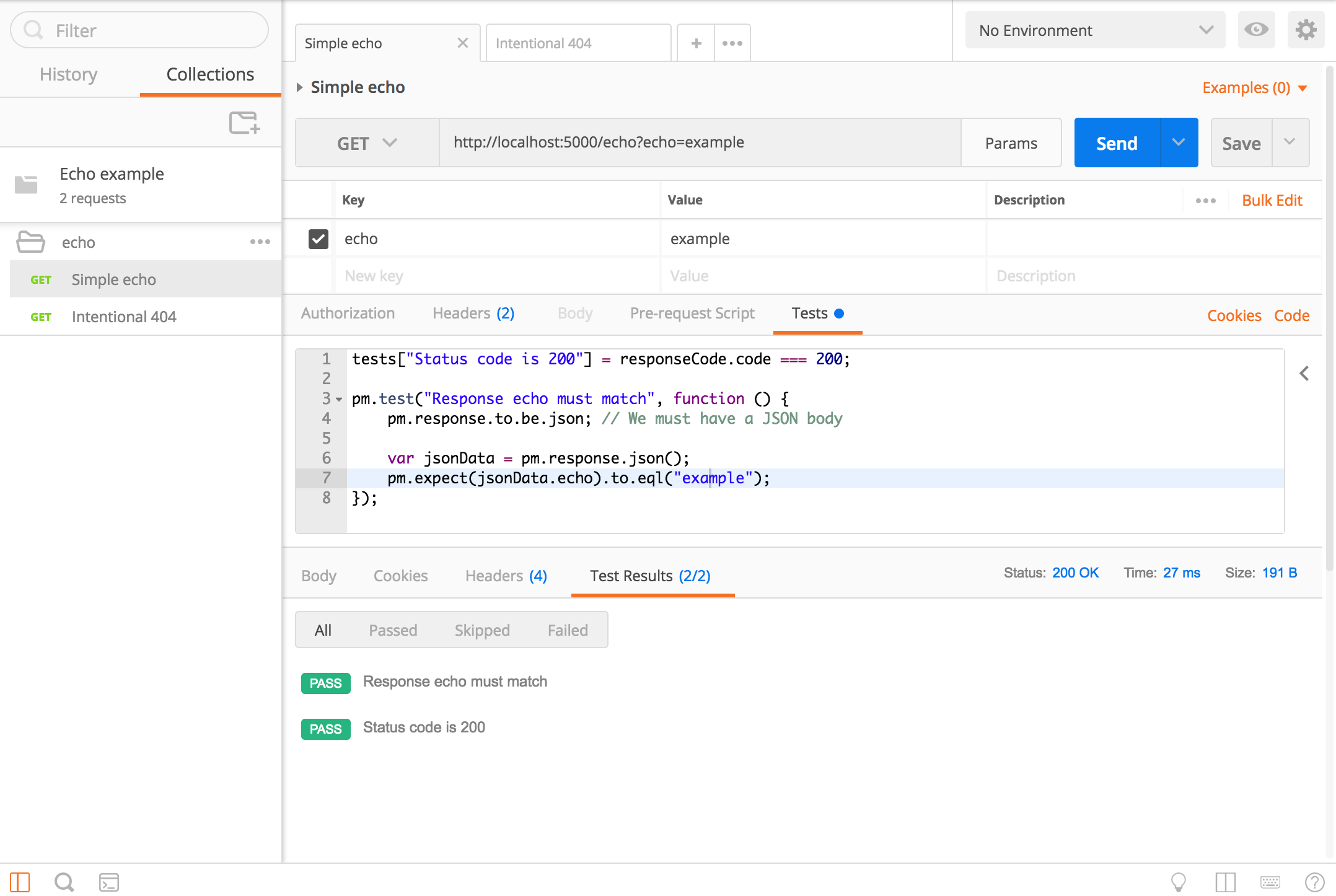

Postman is a super handy API tool – if you’re not already using it go grab it now!

You can import an OpenAPI spec into Postman to generate a collection. However, for this API I’ve already done that for you in echo-example.postman_collection.json.

This is super handy because you can then write more detailed tests against API calls. Each test is crafted using some simple JavaScript and is shown in the ‘tests’ tab of the response.

For further reading, I’d highly recommend this excellent collection of tips for API testing. I’d also recommend exploring Postman environments as a way to keep a collection flexible and configurable for the different stages of your development.

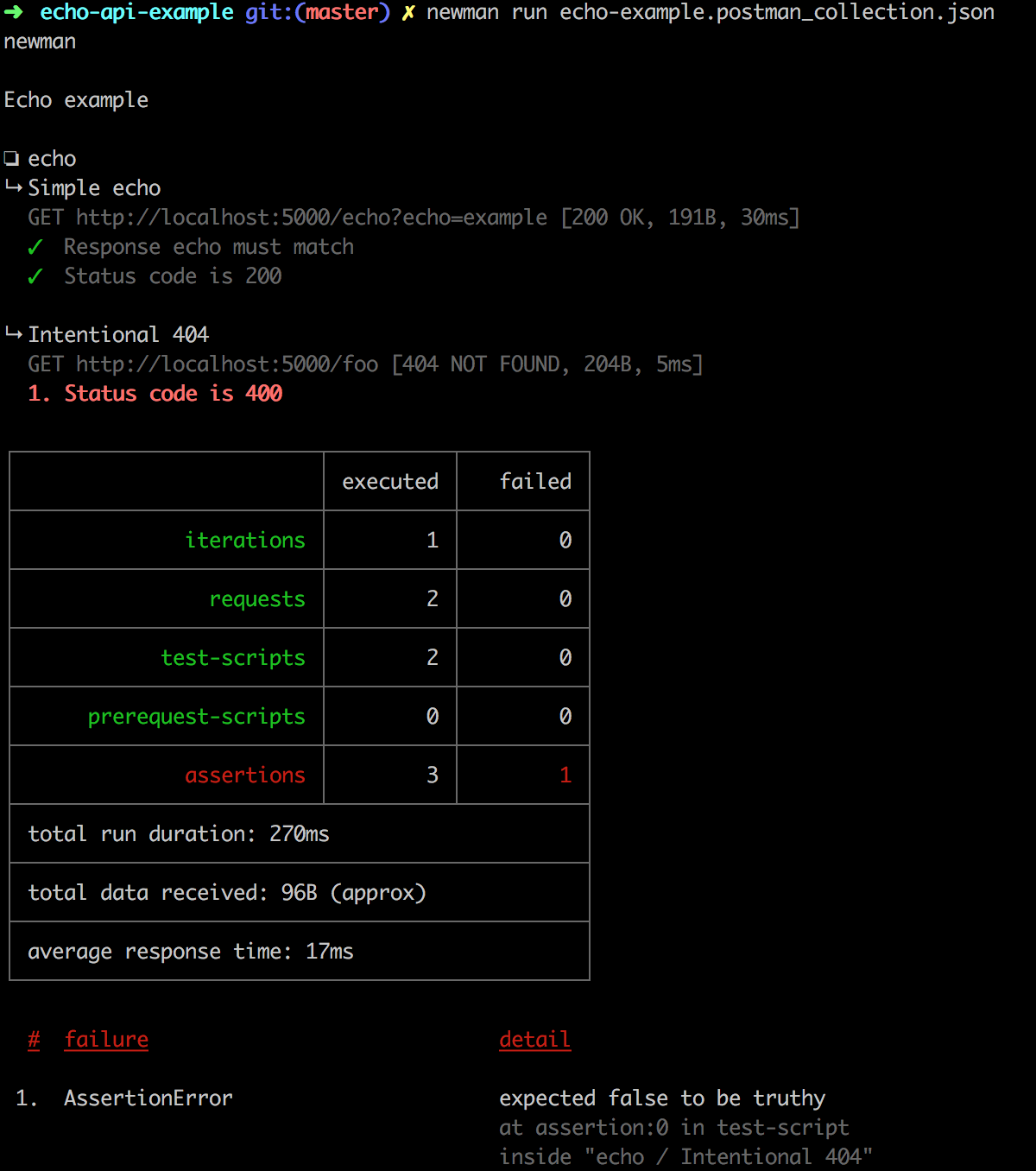

Once saved to disk, that Postman collection can be run on the command line using newman.

```shell
npm install -g newman
newman run echo-example.postman_collection.json
```

And the output of newman gives me a summary of the tests that passed/failed.

In this example, I needed to go back and make sure that my 404 responses were returning the correct HTTP status code.

Tests for different purposes

Dredd allows me to make sure that my implementation of the API matches the contract I’m maintaining (i.e. the spec), and vice versa. This means other tools that consume the spec, such as SDK and documentation generators, will work with my API.

Postman collection tests allow me to check the implementation of the API and ensure features such as the echo param are behaving correctly.

It’s not too far-fetched to consider Postman collection testing as a sort of integration test for your API, while the dredd spec testing ensures backwards compatibility (or at least flags changes in the contract).

Documentation generation

Finally we arrive at documentation. I won’t dwell too much on docs, since they’re possibly the most popular use of an API spec.

For this echo example API I’m going to use ReDoc to generate some basic documentation. There’s an excellent snippet of code over on apis.guru, and I’ll serve it up from the Flask API as per this commit.

That means you can head on over to https://echo.services.developerjack.com/docs and explore the generated documentation.
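The apis.guru snippet itself isn’t reproduced here. As a hedged sketch of the same idea, this function renders a standalone ReDoc page: a `<redoc>` element pointing at the spec URL, plus the ReDoc bundle loaded from its CDN. The /openapi.yml spec path and the `redoc_page` name are assumptions; returning this string from a /docs route in Flask (or any other framework) is all that serving the docs takes.

```python
from html import escape

# ReDoc's standalone bundle, as published on its CDN.
REDOC_BUNDLE = "https://cdn.redoc.ly/redoc/latest/bundles/redoc.standalone.js"


def redoc_page(spec_url, title="Echo API docs"):
    """Build an HTML page that lets ReDoc fetch and render the spec in the browser."""
    # escape() keeps quotes and ampersands in the URL or title from breaking the HTML.
    return f"""<!DOCTYPE html>
<html>
  <head>
    <title>{escape(title)}</title>
    <meta charset="utf-8">
  </head>
  <body>
    <redoc spec-url="{escape(spec_url)}"></redoc>
    <script src="{REDOC_BUNDLE}"></script>
  </body>
</html>
"""


print(redoc_page("/openapi.yml"))
```

Because the spec is fetched client-side, the docs update as soon as the spec file does; nothing needs regenerating.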

I’m not specifically advocating serving your docs from your API; it’s just convenient in this example. Docs generated from your OpenAPI spec shouldn’t be considered the only docs you need, but they’re a good start for explaining what your API is.

Your next documentation step should be to explore how to use your API.

Client generation

At this point I’ve mocked an API, built it, tested it and documented it. Now it’s time to get someone else consuming it.

cURL

For starters, most Swagger UI based tools (such as SwaggerHub, used for mocking earlier) also include a cURL command generator. For example, I’ve republished the spec as v1.0.0 (setting host to the production URI in the specification), and it generated this command:

```shell
curl -X GET "https://echo.services.developerjack.com/echo?echo=This%20is%20a%20sample%20string" -H "accept: application/json"
```
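The %20 sequences in that command are just standard percent-encoding of the query string. As an illustrative sketch (the `curl_command` helper is hypothetical and not part of SwaggerHub), the same command can be rebuilt with Python’s standard library:

```python
from urllib.parse import quote, urlencode

BASE_URL = "https://echo.services.developerjack.com/echo"


def curl_command(message):
    """Build a cURL GET command for the echo endpoint, percent-encoding the message."""
    # quote_via=quote encodes spaces as %20; the default (quote_plus) would use "+".
    query = urlencode({"echo": message}, quote_via=quote)
    return f'curl -X GET "{BASE_URL}?{query}" -H "accept: application/json"'


print(curl_command("This is a sample string"))
```

Run as-is, it prints the same command SwaggerHub generated.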

SDKs

For most languages you can use something like swagger-codegen, which also has a handy Docker image.

```shell
docker pull swaggerapi/swagger-codegen-cli
mkdir sdk
docker run -v ${PWD}:/local \
  swaggerapi/swagger-codegen-cli generate \
  -i https://raw.githubusercontent.com/devjack/echo-api-example/master/openapi.yml \
  -l python \
  -o /local/sdk/python
```

All going well, we should now have a Python SDK in ./sdk/python. If you’re publishing a package for your API, you might want to maintain this separately. For the purposes of this example I’ve committed it to the repo so that client.py can use it. I also renamed the generated SDK package to echo-example-swagger-client so that, if I wanted to, I could publish it to PyPI. Locally, I just ran pip install . in the SDK directory.

Sample API clients

Not only is an SDK useful for clients, but code samples for that SDK are also generated!

Here’s a sample API client (you’ll find it in client.py), and it looks a little like this:

```python
from __future__ import print_function
import time
import swagger_client
from swagger_client.rest import ApiException
from pprint import pprint

# create an instance of the API class
api_instance = swagger_client.DefaultApi()
echo = 'echo_example'  # str | A string to echo back. (optional)

try:
    api_response = api_instance.echo(echo=echo)
    pprint(api_response)
except ApiException as e:
    print("Exception when calling DefaultApi->echo: %s\n" % e)
```

Not only is this SDK generated for me, but it’s based on the API spec I’ve already tested with Dredd, so I know it’s going to work.

Generating API Specs

Lots of folks I chat to are particularly keen on generating their API spec from the implementation. There are heaps of libraries that make this super easy as well: simply add annotations to your routing layer and serialisation/views, and you can have a spec at your fingertips in minutes.
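To show roughly what ‘annotations on the routing layer’ means, here’s a toy sketch. The `route` decorator, `REGISTRY` and `build_spec` are hypothetical and far simpler than any real library: each decorated handler records its path, verb and query parameters, and `build_spec` assembles an OpenAPI 2.0-style `paths` object from those records.

```python
# Operations collected from decorated handlers.
REGISTRY = []


def route(path, method="get", query=()):
    """Hypothetical annotation: record a handler's path, verb and query params."""
    def decorator(func):
        REGISTRY.append({
            "path": path,
            "method": method.lower(),
            "summary": (func.__doc__ or "").strip(),
            "query": list(query),
        })
        return func
    return decorator


@route("/echo", query=["echo"])
def echo(message=""):
    """Echo a message back."""
    return {"echo": message}


def build_spec(title, version):
    """Assemble a spec purely from what the code has declared about itself."""
    paths = {}
    for op in REGISTRY:
        paths.setdefault(op["path"], {})[op["method"]] = {
            "summary": op["summary"],
            "parameters": [
                {"name": name, "in": "query", "type": "string"} for name in op["query"]
            ],
            "responses": {"200": {"description": "OK"}},
        }
    return {"swagger": "2.0", "info": {"title": title, "version": version}, "paths": paths}


spec = build_spec("Echo API", "1.0.0")
print(spec["paths"]["/echo"]["get"]["summary"])  # Echo a message back.
```

That’s the appeal: the spec falls out of the code for free. It also means the spec can’t exist until `echo` has been written, and it can only ever describe what the code already does.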

On the surface, this is an excellent idea: your API spec is always up to date and reflects the current API. However, in my mind this is an antipattern when it comes to using an OpenAPI spec to your advantage.

Firstly, you can’t use the spec for early prototyping and feedback. Instead, you would have to actually implement parts of your API routing and serialisation before you can get a complete spec to show people.

Secondly, you would only ever have a document indicating the reference implementation of your API. You’re limited in being able to break out your API into smaller components because of the tight coupling between code and the spec generated.

If you generate your API spec from the code implementation, then automated testing would be checking the implementation and not the interface, and that’s a terrible test design.

By contrast, maintaining a spec alongside your API means you have a clear distinction between API contracts and the implementation that drives them.

With all this said, if you only use spec generation for scaffolding then this can be a kick start to crafting a spec that you maintain side by side with your API – but use it sparingly and wisely.

API specs are not just for docs

An API spec, and specifically the OpenAPI spec, is a hugely powerful tool in our API development arsenal. Crafted and maintained correctly, a spec can be used at every step of your API lifecycle from ideation and early prototyping, to implementation, testing, generating client code and, yes, even documentation!